Hey guys! In the first two posts about Azure Files, I explained what Azure Files is (Click here to read) and showed the simplest way of configuring it, using the storage account’s access key (Read this post here). In this post, we will enable on-premises Active Directory authentication for Azure Files.

When on-premises AD authentication is enabled for Azure Files, your AD domain-joined machines, regardless of whether they are in Azure or on-premises, will be able to use Azure Files using their existing AD credentials.

Prerequisites

Before you enable AD DS authentication for Azure file shares, make sure you have completed the following prerequisites:

- Select or create your AD DS environment and sync it to Azure AD with Azure AD Connect.

- You can enable the feature on a new or existing on-premises AD DS environment. Identities used for access must be synced to Azure AD. The Azure AD tenant and the file share that you are accessing must be associated with the same subscription.

- Domain-join an on-premises machine or an Azure VM to on-premises AD DS. For information about how to domain-join, refer to Join a Computer to a Domain.

- If your machine is not domain joined to an AD DS, you may still be able to leverage AD credentials for authentication if your machine has line of sight of the AD domain controller.

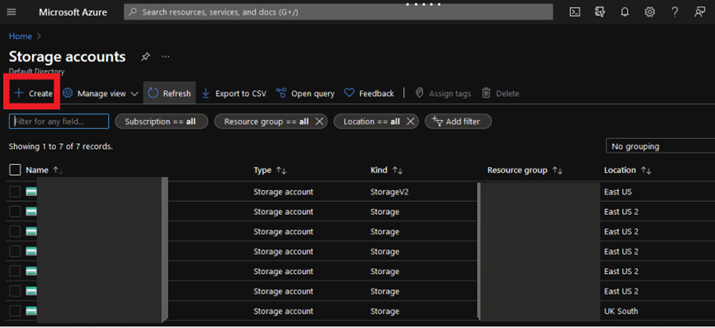

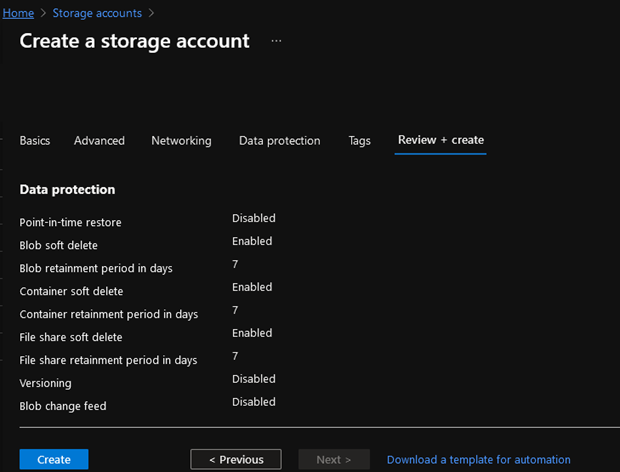

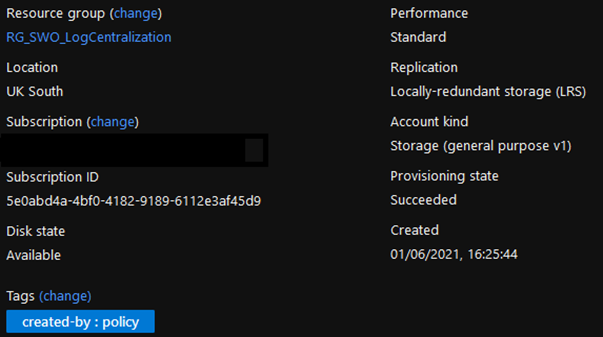

- Select or create an Azure storage account. For optimal performance, we recommend that you deploy the storage account in the same region as the client from which you plan to access the share. Then, mount the Azure file share with your storage account key. Mounting with the storage account key verifies connectivity.

- Make sure that the storage account containing your file shares is not already configured for Azure AD DS authentication. If Azure AD DS authentication for Azure Files is enabled on the storage account, it needs to be disabled before switching to on-premises AD DS. This implies that existing ACLs configured in the Azure AD DS environment will need to be reconfigured for proper permission enforcement.

- Make any relevant networking configuration prior to enabling and configuring AD DS authentication to your Azure file shares. See Azure Files networking considerations for more information.

- If you don’t have .NET Framework 4.7.2 installed, install it now. It is required for the module to import successfully.

- Download and unzip the AzFilesHybrid module (GA module: v0.2.0+). Note that AES-256 Kerberos encryption is supported on v0.2.2 or above. If you have enabled the feature with an AzFilesHybrid version below v0.2.2 and want to update to support AES-256 Kerberos encryption, please refer to this article.

- Install and execute the module on a device that is domain joined to on-premises AD DS, using AD DS credentials that have permissions to create a service logon account or a computer account in the target AD.

- Run the script using an on-premises AD DS credential that is synced to your Azure AD. The on-premises AD DS credential must have either Owner or Contributor Azure role on the storage account.

Source: https://docs.microsoft.com/en-us/azure/storage/files/storage-files-identity-auth-active-directory-enable and https://docs.microsoft.com/en-us/azure/storage/files/storage-files-identity-ad-ds-enable.
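Two of the prerequisites above can be checked locally before you go any further. The snippet below is only a rough sketch: it assumes a Windows machine and relies on the documented registry `Release` value for .NET Framework (461808 or higher means 4.7.2 or later). The messages are my own wording and nothing here is part of the AzFilesHybrid module.

```powershell
# Sketch of a local prerequisite check; run it on the machine where you plan to use AzFilesHybrid.

# .NET Framework 4.7.2 or later reports a Release value of 461808 or higher.
$ndpKey  = 'HKLM:\SOFTWARE\Microsoft\NET Framework Setup\NDP\v4\Full'
$release = (Get-ItemProperty -Path $ndpKey -Name Release).Release
if ($release -ge 461808) {
    Write-Host ".NET Framework 4.7.2 or later is installed (Release $release)."
} else {
    Write-Warning ".NET Framework 4.7.2 is missing (Release $release). Install it before importing the module."
}

# Check whether this machine is joined to a domain.
$computer = Get-CimInstance -ClassName Win32_ComputerSystem
if ($computer.PartOfDomain) {
    Write-Host "This machine is joined to the domain $($computer.Domain)."
} else {
    Write-Warning "This machine is not domain joined."
}
```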

OK, let’s get started.

Enabling Active Directory authentication for Azure Files comes down to joining the storage account that hosts the file share to your Active Directory. When you enable AD authentication for the storage account, it applies to all new and existing Azure file shares in that account.

Step-by-step

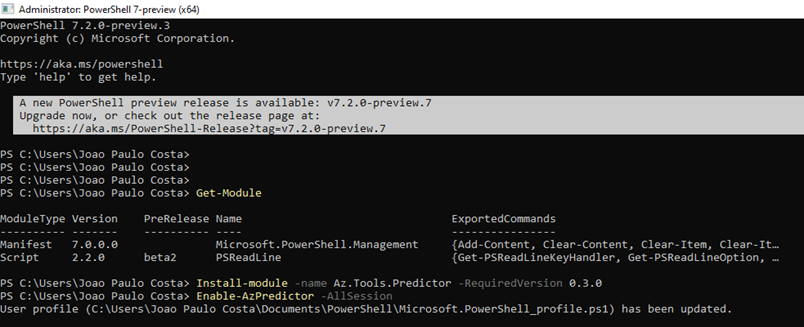

First, download the AzFilesHybrid script package. It is a module that you add to your PowerShell environment and use to enable “hybrid” Active Directory authentication. It is a very simple task: follow the steps described in the text file inside the zip file. The commands below assume that $Url holds the download link for the zip file.

Invoke-WebRequest -Uri $Url -OutFile "C:\AzFilesHybrid.zip"

Expand-Archive -Path "C:\AzFilesHybrid.zip"

Next, change the script execution policy in your PowerShell environment by running the following command: Set-ExecutionPolicy -ExecutionPolicy Unrestricted -Scope CurrentUser

If you don’t have the Azure PowerShell module (Az) yet, install it with the command Install-Module Az -AllowClobber
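For reference, the steps in the text file usually boil down to something like the sketch below. It assumes the package contains the `CopyToPSPath.ps1` helper described in the Microsoft documentation, and `$modulePath` is a hypothetical location: point it at wherever you extracted the zip file.

```powershell
# Assumption: the archive was extracted to this folder; adjust the path to your environment.
$modulePath = "C:\AzFilesHybrid"   # hypothetical location

# Run the helper script that copies the module into your PowerShell module path.
Set-Location -Path $modulePath
.\CopyToPSPath.ps1

# Import the module and list the commands it exposes to confirm the import worked.
Import-Module -Name AzFilesHybrid
Get-Command -Module AzFilesHybrid
```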

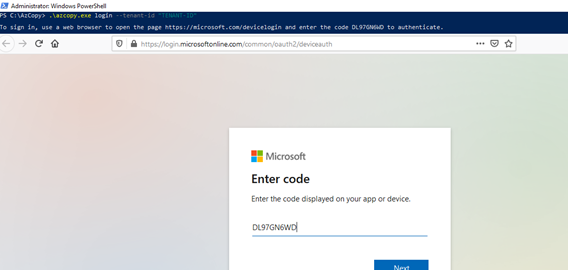

Now connect to your Azure account and select the correct subscription, using the command shown in the screenshot.

In my example I have only one subscription associated with this user. If you have more than one, you can use the Get command shown in the screenshot to find and select the correct one.
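In text form, the sign-in and subscription selection could look like the sketch below. The cmdlets are the standard Az ones; `<subscription-id>` is a placeholder that you need to replace with your own value.

```powershell
# Sign in interactively with a hybrid identity that has access to the storage account.
Connect-AzAccount

# List the subscriptions available to the signed-in user.
Get-AzSubscription | Format-Table Name, Id, State

# Select the subscription that contains the storage account (placeholder value).
Set-AzContext -Subscription "<subscription-id>"

# Confirm which subscription is now active.
Get-AzContext
```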

Finally, register the target storage account in Azure with your Active Directory environment by specifying the domain name, the domain account type (you can choose between a computer account and a service logon account), and the target OU where the service/computer account will be created:

Join-AzStorageAccountForAuth -ResourceGroupName "<resource-group-name>" -Name "<storage-account-name>" -Domain "yourLocalADDomain.co.uk" -DomainAccountType ServiceLogonAccount -OrganizationalUnitDistinguishedName "ou-name-attribute-value"

After running the command above, you can confirm in AD that the account has been created. You can also run the following commands, which show the storage account’s Kerberos keys, the directory service selected for the storage account, and the directory domain information (if AD authentication for file shares is enabled on the storage account).

$storageAccount = Get-AzStorageAccount -ResourceGroupName "<resource-group-name>" -Name "<storage-account-name>"

$storageAccount | Get-AzStorageAccountKey -ListKerbKey | Format-Table KeyName

$storageAccount.AzureFilesIdentityBasedAuth.DirectoryServiceOptions

$storageAccount.AzureFilesIdentityBasedAuth.ActiveDirectoryProperties

Also remember to rotate the password of the AD account that represents the storage account before its maximum password age expires. You can update the AD account password for the Azure storage account by running the following PowerShell command:

Update-AzStorageAccountADObjectPassword -RotateToKerbKey kerb2 -ResourceGroupName "<resource-group-name>" -StorageAccountName "<storage-account-name>"

Also if you prefer, you can set the password to never expire in AD.

The expected end result should be like the screenshots below.

The last step is to grant access to the appropriate users and groups. An identity (user, group, or service account) must have the necessary permission at the share level. To allow access, Microsoft provides three built-in roles for granting share-level permissions:

- Storage File Data SMB Share Reader: allows read access to files and directories in Azure file shares. This role is analogous to a file share ACL of read on Windows file servers.

- Storage File Data SMB Share Contributor: allows read, write, and delete access on files and directories in Azure file shares.

- Storage File Data SMB Share Elevated Contributor: allows read, write, delete, and modify ACLs on files and directories in Azure file shares. This role is analogous to a file share ACL of change on Windows file servers.

You can use the Azure portal, PowerShell, or Azure CLI to assign the built-in roles to the Azure AD identity of a user to grant share-level permissions.

To assign an Azure role to an Azure AD identity, using the Azure portal, follow these steps:

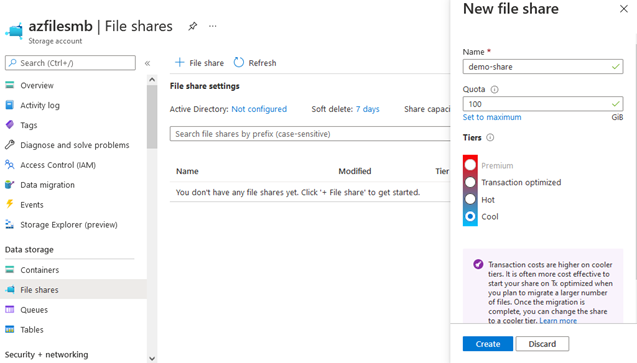

- In the Azure portal, go to your file share, or create a file share.

- Select Access Control (IAM).

- Select Add a role assignment.

- In the Add role assignment blade, select the appropriate built-in role from the Role list:

  - Storage File Data SMB Share Reader

  - Storage File Data SMB Share Contributor

  - Storage File Data SMB Share Elevated Contributor

- Leave Assign access to at the default setting: Azure AD user, group, or service principal. Select the target Azure AD identity by name or email address. The selected Azure AD identity must be a hybrid identity and cannot be a cloud only identity. This means that the same identity is also represented in AD DS.

- Select Save to complete the role assignment operation.
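If you prefer PowerShell over the portal, the same assignment can be sketched as follows. Every value in angle brackets is a placeholder, and the Contributor role is picked only as an example. The scope targets a single file share, so the permission applies at the share level.

```powershell
# Pick one of the three built-in roles (Contributor is only an example here).
$roleName = "Storage File Data SMB Share Contributor"

# Resource ID of the file share; replace every placeholder with your own values.
$scope = "/subscriptions/<subscription-id>/resourceGroups/<resource-group-name>/providers/Microsoft.Storage/storageAccounts/<storage-account-name>/fileServices/default/fileshares/<share-name>"

# Assign the role to a hybrid identity (synced from AD DS), identified by its UPN.
New-AzRoleAssignment -SignInName "<user-principal-name>" -RoleDefinitionName $roleName -Scope $scope

# List the assignments on the share to confirm the result.
Get-AzRoleAssignment -Scope $scope | Format-Table DisplayName, RoleDefinitionName
```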

Now just test the access. If you did everything as described here, the result will be as follows:
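One way to run this test from a domain-joined machine is sketched below. It assumes you are signed in as a user who received one of the share-level roles, that drive letter Z: is free, and that the placeholders are replaced with your storage account and share names. Note that no storage account key is passed, so the mount only succeeds if AD authentication works.

```powershell
# Placeholder host name of the storage account's file endpoint.
$storageHost = "<storage-account-name>.file.core.windows.net"

# SMB uses TCP port 445, so check that it is reachable first.
$check = Test-NetConnection -ComputerName $storageHost -Port 445

if ($check.TcpTestSucceeded) {
    # No key is supplied: the AD credentials of the signed-in user are used.
    net use Z: "\\$storageHost\<share-name>"

    # List the contents of the share to confirm access.
    Get-ChildItem -Path "Z:\"
} else {
    Write-Warning "Port 445 is not reachable. Check your network configuration before testing AD authentication."
}
```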

That’s all for today guys, I’ll talk to you soon!

Joao Costa